Ryokan Ri

On the day of my Ph.D. graduation ceremony.

About Me

Ryokan Ri (李 凌寒), Ph.D.

A Research Engineer at Google DeepMind, specializing in the development and application of large language models (LLMs). Formerly at SB Intuitions, focusing on developing Japanese-centric models.

My primary research interest is in Natural Language Processing (NLP). Some of my specific research interests include:

- Large language models (LLMs)

- Multilingual models and cross-lingual transfer

- The intersection of linguistics and machine learning

Links: Google Scholar, LinkedIn, GitHub

Research and Engineering Focus

Large Language Models

LLM Books

I have co-authored two books on large language models (LLMs) for Japanese practitioners: “Introduction to Large Language Models” and its sequel, “Introduction to Large Language Models Ⅱ”. These books cover the basics of LLMs with sample code and practical tips for training and fine-tuning.

Sarashina: Japanese-centric LLM

I was involved in developing Sarashina, a Japanese-centric LLM. Sarashina achieved top-class performance on Japanese-language tasks among open-source LLMs. This was the result of a team effort at SB Intuitions.

FlexEval: LLM Evaluation Tool

While many LLM evaluation libraries are available, they cover different domains and methods, and switching between them is cumbersome. I am developing a unified evaluation tool, FlexEval, that can be used to evaluate LLMs with various evaluation tasks, metrics, and methods, ranging from few-shot evaluation to LLM-as-a-judge approaches. It abstracts away various implementations of language models, evaluation datasets, and tasks, allowing users to specify them with configuration files.
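As a rough illustration of the configuration-driven idea described above, here is a minimal Python sketch. It is not FlexEval's actual API: every name in it (`LanguageModel`, `EchoModel`, `UppercaseModel`, `MODELS`, `DATASETS`, `METRICS`, `evaluate`) is hypothetical, and the toy models stand in for real LLM backends. It only shows how a model, a dataset, and a metric can sit behind small interfaces and be selected by name from a configuration.

```python
from typing import Callable, Protocol


class LanguageModel(Protocol):
    """Hypothetical interface that hides how a model is implemented."""

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Toy stand-in for an LLM: returns the last word of the prompt."""

    def generate(self, prompt: str) -> str:
        return prompt.split()[-1]


class UppercaseModel:
    """Toy stand-in for an LLM: returns the last word, uppercased."""

    def generate(self, prompt: str) -> str:
        return prompt.split()[-1].upper()


def exact_match(prediction: str, reference: str) -> float:
    """Score 1.0 when the prediction equals the reference, else 0.0."""
    return float(prediction == reference)


# Hypothetical registries mapping configuration names to components.
MODELS: dict[str, Callable[[], LanguageModel]] = {
    "echo": EchoModel,
    "uppercase": UppercaseModel,
}
DATASETS: dict[str, list[tuple[str, str]]] = {
    "toy": [
        ("Repeat the word: cat", "cat"),
        ("Repeat the word: dog", "dog"),
    ],
}
METRICS: dict[str, Callable[[str, str], float]] = {
    "exact_match": exact_match,
}


def evaluate(config: dict[str, str]) -> float:
    """Resolve components by name and return the mean metric score."""
    model = MODELS[config["model"]]()
    dataset = DATASETS[config["dataset"]]
    metric = METRICS[config["metric"]]
    scores = [metric(model.generate(prompt), ref) for prompt, ref in dataset]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    config = {"model": "echo", "dataset": "toy", "metric": "exact_match"}
    print(evaluate(config))
```

In this sketch, switching the model or metric means changing one string in the configuration, with no change to the evaluation loop itself.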

Seeking Language Universals in Neural Networks

My Ph.D. research focused on the commonalities across languages captured by neural network models.

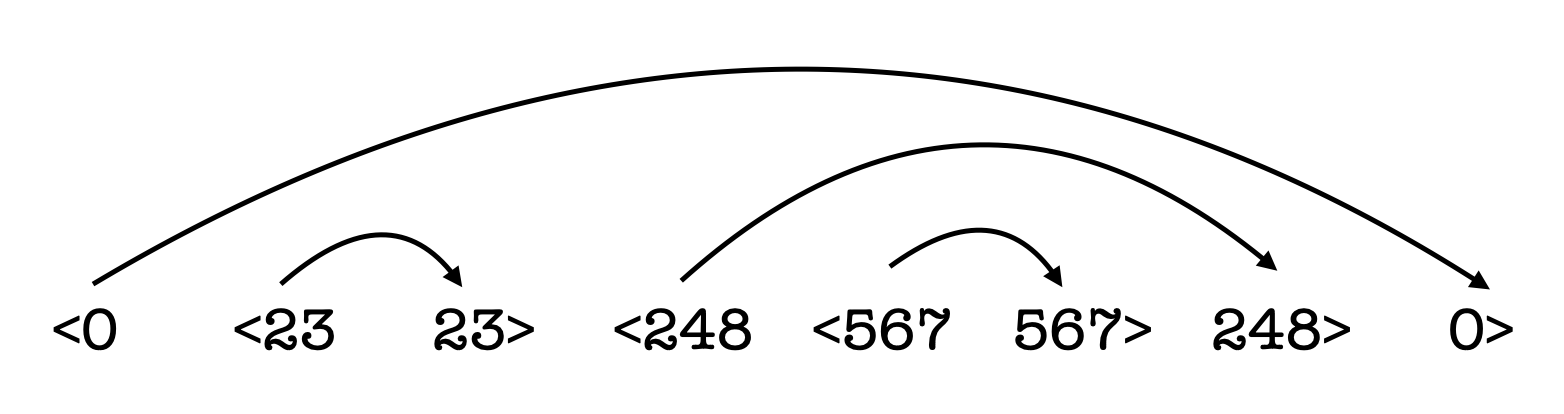

“Pretraining with Artificial Language: Studying Transferable Knowledge in Language Models”, R. Ri and Y. Tsuruoka.

In this work, we investigate the transferability of knowledge acquired through language modeling. Natural language can be characterized by various linguistic or statistical properties at different levels, making it difficult to study which are transferable. We created an artificial language with controlled properties, pretrained a neural network on it, and transferred it to natural languages. Our findings show that simple statistical dependencies are key to transferability.

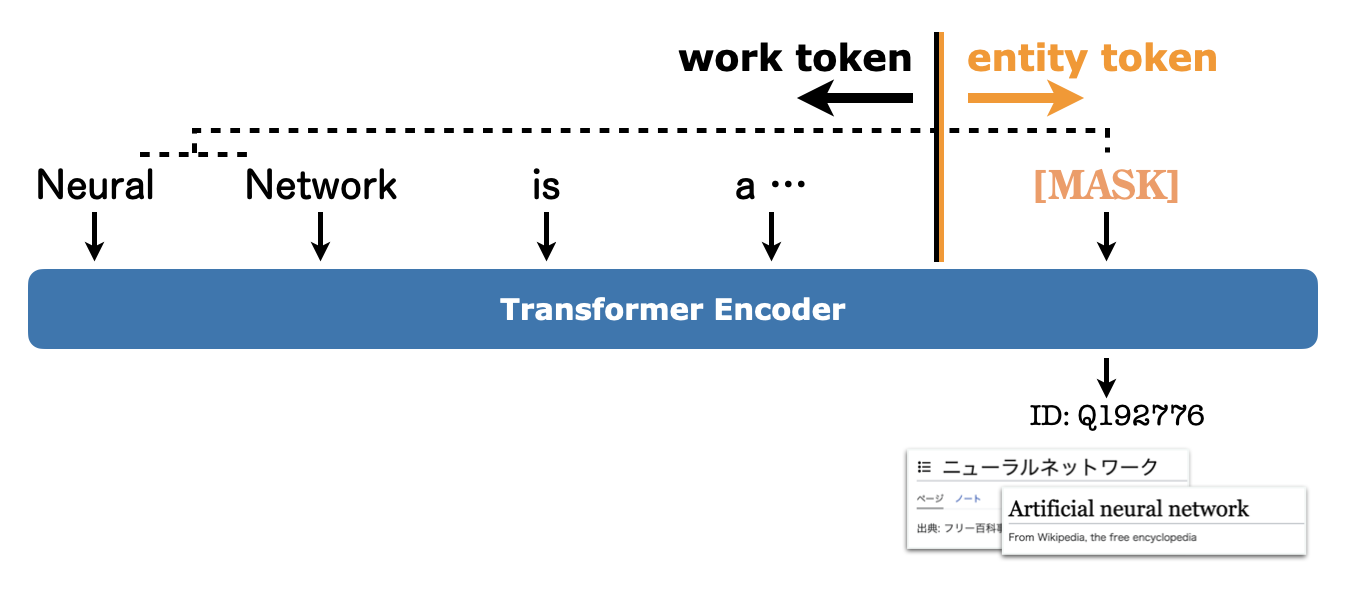

“mLUKE: The Power of Entity Representations in Multilingual Pretrained Language Models”, R. Ri, I. Yamada, and Y. Tsuruoka.

We developed mLUKE, a multilingual encoder-style language model that utilizes information about entities (Wikipedia articles) shared across languages. We demonstrate that including entity information in pretraining improves performance on cross-lingual transfer tasks.

Working Experience

- November 2025 - : Research Engineer at Google DeepMind

- April 2023 - November 2025: Research Engineer at SB Intuitions ← LY Corporation ← LINE

  - Transitioned through company merger and internal transfer

- April 2020 - March 2023: Research intern at Studio Ousia in Tokyo, Japan

- July 2020 - September 2020: Research intern at IBM Research - Ireland (Remote)

Education

- April 2018 - : Tsuruoka Lab., Graduate School of Information Science and Technology, The University of Tokyo

  - April 2020 - March 2023: Ph.D. student

  - April 2018 - March 2020: Master’s student

- April 2013 - March 2018: College of Arts and Sciences, The University of Tokyo

  - April 2015 - March 2018: Bachelor of Arts (linguistics)

  - June 2016 - June 2017: The University of Sydney (Student Exchange)

  - April 2013 - March 2015: Natural Science Ⅰ

Awards and Honors

- Research Paper Award from The Association for Natural Language Processing, 2023.

- Best Paper Award (2 out of 579) from The Association for Natural Language Processing in NLP 2023 (Japanese domestic conference).

- Microsoft Research PhD Fellowship 2021 Nomination Award.

- Language Resource Award from The Association for Natural Language Processing in NLP 2021 (Japanese domestic conference).

Publications

International Conferences / Journals

For the full publication list, please refer to Google Scholar.

Analyzing Multilinguality of Neural Networks

- R. Ri and Y. Tsuruoka, “Pretraining with Artificial Language: Studying Transferable Knowledge in Language Models”, in Proceedings of the 60th ACL (long), 2022. [anthology] [arxiv]

- R. Ri and Y. Tsuruoka, “Revisiting the Context Window for Cross-lingual Word Embeddings”, in Proceedings of the 58th ACL (long), 2020. [anthology] [arxiv]

Multilingual Language Models and Representation Learning

- I. Yamada and R. Ri, “LEIA: Facilitating Cross-Lingual Knowledge Transfer in Language Models with Entity-based Data Augmentation”, 2024. [anthology] [arxiv]

- R. Ri, I. Yamada, and Y. Tsuruoka, “mLUKE: The Power of Entity Representations in Multilingual Pretrained Language Models”, in Proceedings of the 60th ACL (long), 2022. [anthology] [arxiv]

- S. Nishikawa, R. Ri, I. Yamada, Y. Tsuruoka, and I. Echizen, “EASE: Entity-Aware Contrastive Learning of Sentence Embedding”, in Proceedings of NAACL, 2022. [anthology] [arxiv]

Book

- I. Yamada, M. Suzuki, S. Nishikawa, K. Fujii, K. Yamada, R. Ri, “大規模言語モデル入門 Ⅱ 〜生成型LLMの実装と評価 (Introduction to Large Language Models Ⅱ)”, Gijutsu-Hyohron Co., Ltd., 2024. [Amazon]

- I. Yamada, M. Suzuki, K. Yamada, R. Ri, “大規模言語モデル入門 (Introduction to Large Language Models)”, Gijutsu-Hyohron Co., Ltd., 2023. [Amazon]

Skills

Natural Languages

- Japanese (Native)

- English (Fluent)

- Chinese (Fluent)

Programming Languages

- Python (Advanced)

- C++ (Intermediate)

- C (Intermediate)

- Java (Basic)

- Kotlin (Basic)

Fun Facts

- ⛴ Licensed as a boat captain in Japan.

- 🎥 Run a YouTube channel with over 100,000 subscribers, focusing on linguistics and language in general.